Artificial Intelligence and Education: A ‘Moonshot’ Investment for the Future?

Mar 28, 2024

The recent publication of a memoir by Dr. Li Feifei, inaugural Sequoia Professor in the Computer Science Department at Stanford University, and Co-Director of Stanford’s Human-Centered AI Institute (HAI), generated a flurry of media commentary on the current state of play in the field of Artificial Intelligence (AI). Invoking the memorable language of John F. Kennedy in 1961 at the launch of the US effort to land a human on the moon by the end of the 1960s, Prof. Li, known in the media as the ‘Godmother’ of AI, called for a similar quantum of strategic national investment in terms of both finances and effort to forge ahead in the field of AI. For both President Kennedy and Prof. Li, the enormity of the challenge to be met, be it space travel or AI, transcends existing capabilities and resources, requiring total commitment at a national level.

Reference: Bloomberg

There are significant differences in these two undertakings. The ‘moonshot’ of the 1960s was a physical ‘point-to-point’ challenge, drawing on the efforts of just one nation. In the 21st century, we face an adversary that is more virtual than physical, one that has infiltrated our daily lives, encircles the globe without regard for borders or barriers, knows no limits, yet impacts every member of the human race. Prof. Li’s technological ‘call to arms’ comes at an important juncture in human history, as AI promises either a ‘golden age’ or chaos for humankind.

Before considering the challenge posed by AI in the present and future, it is worthwhile to reflect briefly on the historical background to this emergent area of human endeavor.

The history of intelligence in artificial form stretches back to the myths and legends of antiquity. Legends abound in many cultures about automated artefacts that could mimic the capabilities of animals or even humans. A programmable machine that could produce musical notes was reportedly designed and built in the 9th century. Over time, artefacts were invented with specialized functions to aid humankind. The invention of the clock was an important milestone in the development of mechanical artefacts with the ability to measure or calculate. Some early clocks still function after 800 years of service!

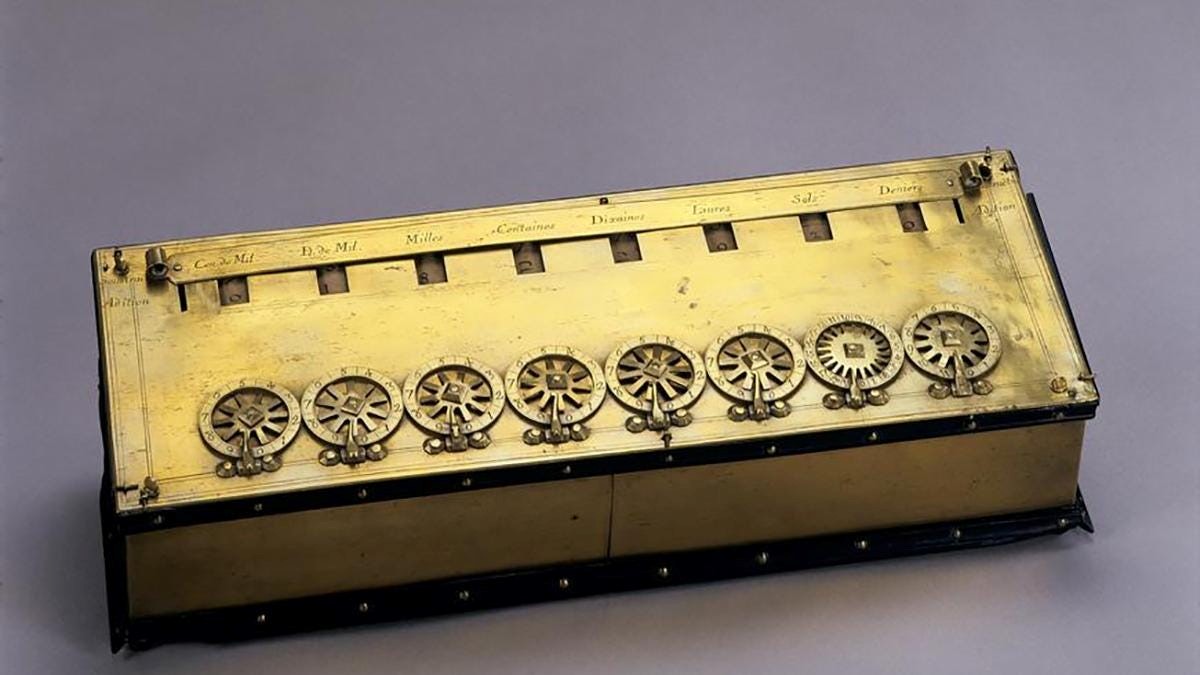

The first known mechanical calculator was designed in the early 17th century: Wilhelm Schickard is credited with designing a machine that could add and assist with multiplication. Blaise Pascal later produced a working prototype of a calculator, the Pascaline, in 1642.

Reference: Pascaline | Mechanical Calculator, Addition Device, Subtraction | Britannica

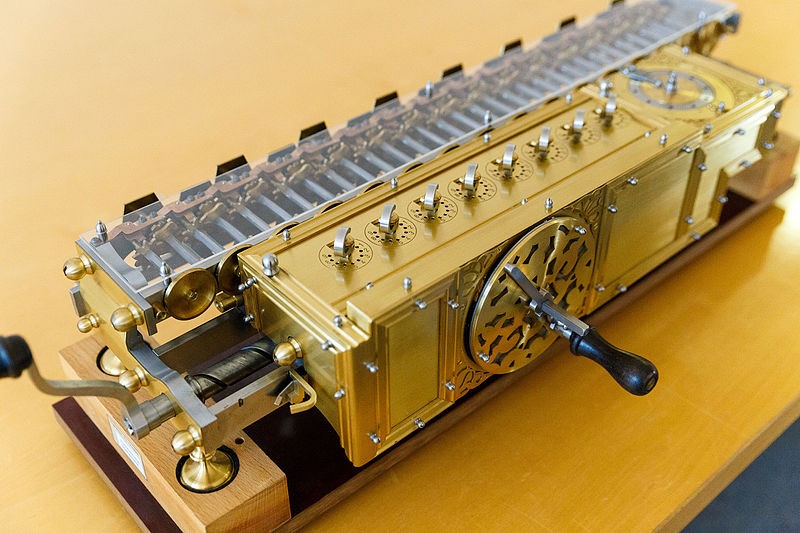

The philosopher and mathematician Gottfried Leibniz speculated that if human experience could be understood as mathematical thinking, which in turn could be represented by rational calculation, then the mechanisation of calculation could provide answers to all questions about reality. His cylindrical stepped reckoner, built at the end of the 17th century, was an attempt to realize this idea. Revolutionary at the time, its principles were incorporated in the design of mechanical computers for another 200 years.

Reference: Leibnitiana (gwleibniz.com)

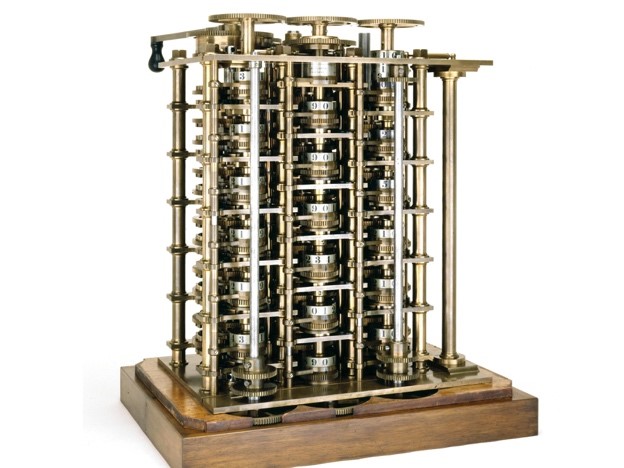

Charles Babbage, an English philosopher and mathematician, originated the idea of a digital programmable computer; his difference engine, which emerged in prototype form in the early 19th century, is credited as the first mechanical computer. Its design laid the foundations for the development of increasingly complex computing devices.

Reference: The Engines | Babbage Engine | Computer History Museum

In more recent times, technologies that seek to mimic aspects of human intelligence have become rich fields for both scientific research and speculative fiction. Most people around the world already interact with AI artefacts, often unknowingly, through online applications. With the explosive development of information technology, the likelihood of what is known as the Technological Singularity (or singularity), the point at which technology transcends human intelligence, has moved from the pages of science fiction to the realm of scientific plausibility. The term was first coined in the 1950s by John von Neumann, the scientist and mathematician responsible for the design principles on which computer processors still operate, and the singularity was seen as the logical consequence of the ever-accelerating advancement of technology. The dystopian future imagined in the singularity warns of the catastrophic risk to humanity posed by a hyper-intelligent autonomous technology bent on survival.

As educators, parents, students, families, colleagues, community members – members of humankind and world citizens – where does this troubling vision of the future leave us? Prof. Li is quite rightly calling for an investment of time, resources, and human effort to tackle the problem of AI. The key question of course is: to what end? For commercial interests? National interests? To win the global technological arms race? The answer depends very much on the perspective of the one asking the question.

In practical terms, the scale of investment represented by the AI challenge is staggering. For example, training just one new-generation Large Language Model (LLM), on which contemporary AI tools rely, would reportedly require an investment of well over US$100 million. As noted by Prof. Li, the hardware needed for such a task is at a scale of complexity well beyond the reach of even top-tier universities like Stanford. Educational institutions, lacking the technical and hardware capacity and the business model to attract venture capital, can only play a supporting role in the development of cutting-edge AI tools. Private corporations can raise funds and invest, but will of course seek a return on their investment and will no doubt need to be rewarded for the risks borne in taking on these challenges. The skills needed are in high demand and receive rich compensation. Many with insight and foresight are keen to see the potential benefits of AI flowing through to all humankind, but at what cost? The forces of so-called rational self-interest are pushing the development and deployment of AI forward with inexorable urgency. Is the singularity inevitable?

As an educational institution, ISF sits in a difficult place when it comes to AI. Our institutional duty is to take responsibility for educating the next generation of leaders, thinkers, inventors, researchers – all of whom will draw heavily on the utilisation and mastery of AI tools to achieve success. At the same time, we are bound by the relatively modest means at our disposal for the creation and implementation of current, relevant, and effective AI-focused learning to discharge that responsibility. Setting aside the worst-case scenario of the singularity, Prof. Li quite accurately describes AI as ‘messy’; in fact, it is rapidly becoming a ‘wicked problem’ for humanity, one that is multi-dimensional, cannot be solved by single and simple solutions, is constantly and rapidly evolving, requires novel approaches and solutions, and has the capacity to exert a huge impact on the future of the world. As educators, we are trying to hit a swift-moving, rapidly evolving target with the aging tools currently at our disposal in schools. Increasingly, geography may also limit our access to some of the more effective applications and tools in this task. AI is a global problem worthy of our best efforts. It is indeed a challenge of global ‘moonshot’ proportions!

The ‘messy’ complexities and uncertainties of AI are part of the realities of this age. The future of the work our children will undertake, and the lives they will live, is at stake. This does not mean we should admit defeat, or wait passively for someone else to solve the problem for us. It does mean, however, that we need to apply the necessary creativity, collaborative effort, and valuable resources with prudence and wisdom in order to make some headway for our young learners. Working ‘smarter’, not ‘harder’, sounds great, but innate cleverness alone will not solve our dilemma. Predictive anticipation is necessary. Stepping back from the problem to gain some insight at a distance is somewhat helpful in mapping its parameters. Ultimately, however, action is needed.

The new ISF strategic plan, covering the years from 2024 to 2029, will embrace at an institutional level the task of educating our children about AI. There are three readily identifiable tiers or phases of AI-related learning that we can use to simplify the model for initial planning purposes: learning about AI (technical parameters, mathematical principles, constituent elements, capabilities, limitations, etc.), learning to use AI (problem formulation, generating content, parsing results, etc.), and learning through AI as a tool to augment research and content creation. This learning will be designed and executed through our AI Laboratory, currently in planning. We will also engage expertise from many different fields to ensure our students have a more nuanced, multidimensional, and complete picture of the field of AI as it evolves and advances.

While we cannot predict the future, the lessons of the remote and recent past point to an unavoidable conclusion: we are accelerating, for better or worse, towards a future in which technologies, including AI, will shape every aspect of the shared human experience. If we cannot moderate the speed of this change, we must exert our best efforts to shape its direction and manage its impact. The ‘moonshot’ metaphor does seem oddly appropriate: an audacious and compelling challenge for the future. However, the risk we face is not the failure of a small team of astronauts to reach the moon; it is that our failure to take action in this technological tide will imperil the future of humanity. High stakes, indeed!

Dr. Malcolm Pritchard

Head of School

Other references:

- The Singularity

- Computer Organization | Von Neumann architecture

- John von Neumann on Singularity – Dictionary of Arguments

- 1973 Rittel and Webber Wicked Problems.pdf (sympoetic.net)